BY YASEL COSTA

Professor at the MIT-Zaragoza International Logistics Program

Over the past three hundred years, successive waves of fundamental technological and industrial development have completely transformed societies at every level and in every country. And while in the long term whole populations have become, in absolute terms, better fed, healthier, longer living and able to access at least some of life’s pleasures and luxuries, it is undeniable that the fairly slow pace of each change has left too many people feeling relatively marginalised, disempowered and insecure.

While in hindsight each wave of development has created greater, less onerous and better-rewarded employment opportunities, in real time, too often, the immediate experience has been one of de-skilling, reduced wages, under-employment and the loss of dignity that brings. Transformation, while relentless, has certainly not been customer-centric, and not infrequently, this has elicited a violent reaction, from the breaking of power looms two hundred years ago to revolutions in current times.

Nobody planned this. Indeed, with the arguable exception of the “Electrification of the Soviet Union”, the social consequences of technological change have never been planned or foreseen. But as we enter a fourth, and some would say fifth, Industrial Revolution, we have the opportunity, indeed the duty, to manage ever more rapid change better by putting the customer, i.e., the people, first.

Another century, another revolution

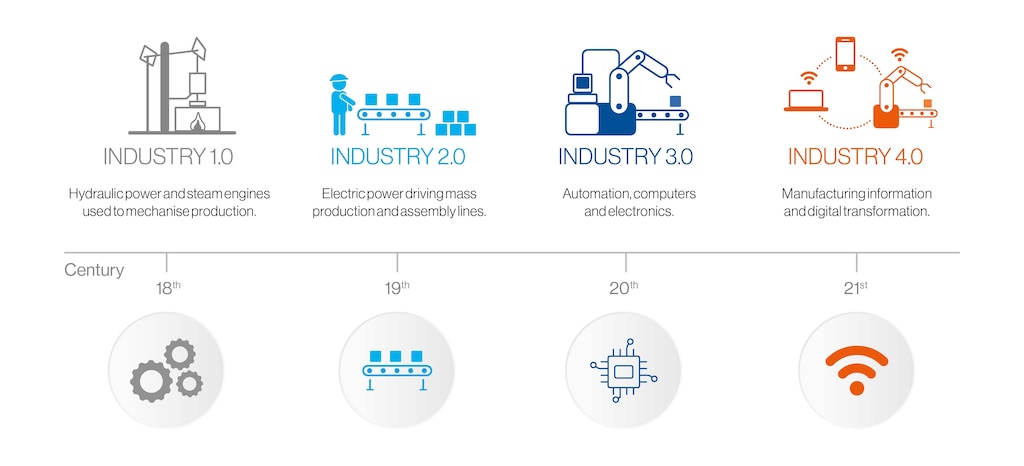

As every schoolchild knows, the First Industrial Revolution, from the second half of the 18th century onwards, saw the application of mechanical power (first water power, then steam) to tasks previously dependent on human or animal muscle, enabling the rise of the factory system. Starting in the textile industry, skilled hand spinners and then weavers were replaced by minders of machines. The approach rapidly extended to other industries, many of which, such as machine building, were created by the revolution itself.

A hundred years later, a second revolution saw the application of electricity. This allowed power to be used at a distance, even a considerable distance, from where it was generated. This enabled, at one level, the development of assembly line production and mechanical or hard automation; at another, it made it possible to locate industry close to markets rather than coalfields.

A third revolutionary phase, starting with the Second World War and continuing to the present day, has been the wholesale adoption of electro-mechanical (think of the old jukebox) and then electronically programmable automation, progressively exploiting new developments from the earliest programmable logic controllers to the latest IT innovations. Unlike earlier revolutions, this impacted not only manufacturing and other physical operations, but also business processes — in design, procurement, finance, administration and everywhere else — as IT enables vast amounts of data to be gathered, manipulated and exchanged.

We have created a digital, or virtual, world. But note that, so far, the machines are, to a large extent, only doing what used to be done, or could in theory have been done, by human workers. They have simply automated human ways of operation and human styles of organisation. They do this faster, more reliably, less dangerously and, often, more economically — but where the economics don’t stack up, we still can and do use people.

To some extent, this division into separate industrial revolutions is an academic conceit, rather like the Stone, Bronze and Iron Ages. Just as people did not stop knapping flints because someone had invented a copper/tin alloy, so commercial handloom weaving survived even in industrialised countries into the 1960s and 70s; conversely, the Jacquard punch card system for “programming” a weaving frame (1804) would have been familiar to mainframe programmers in the 1970s. Much of our current industrial technology will likewise remain relevant for decades to come. Nonetheless, leading companies and industrial/commercial sectors are now well embarked on what is becoming known as Industry 4.0 (after a German government study of similar name a few years ago), and this time, it is different.

Cyber-physical systems

Besides Industry 4.0, the new paradigm goes under other names: some talk of cyber-physical systems, while the analyst Gartner identifies hyperautomation. Whatever the term, the distinction is that we are no longer looking at recognisably traditional processes and operations that are monitored, controlled and driven by computational power that, however advanced, has been designed to mimic human capabilities. Rather, the digital and physical elements form a single entity, and the question is not “how can the machine do this human task better?”, but “how would the machine best go about achieving this goal?” And that may involve ways of operating and working that are not even conceptually capable of being performed by people.

This involves some powerful base technologies that have matured over the past few years. The best known is the Internet of Things, which, at its simplest, means that every object in the system — every machine, sensor, tool, component, even in the limit every person — is addressable: it can be spoken to, and it can speak back. Then there is big data, which is the ability to assemble and work with truly mind-blowing volumes of data, and the analytics capability to find and evaluate deep patterns and meanings in this data while working with incomplete or uncertain information. Cloud computing makes it feasible to combine, work on and share data from any source. All of this can happen in real time, give or take the odd microsecond.

The growing sciences of artificial intelligence (AI) and machine learning mean that these cyber-physical systems can increasingly find their own route to the desired goal; they are not constrained to a path that is predetermined because “that’s how a human would approach the task.”

Intelligence, for we can call it no less, will be incorporated to a greater or lesser degree at every stage. So, in manufacturing, there will be a smart supply chain that is constantly predicting and adapting to changing circumstances, feeding smart manufacturing processes and working practices that are self-monitoring, self-correcting and self-organising. Likewise, it will feed into distribution systems that are similarly predictive and proactive. And, of course, many of the products manufactured will also have smart capabilities, in some cases continuing to “report back” in service to inform and adjust the supply and manufacturing processes.

The result will not be the islands of automation typical of current industrial operations, but a high degree of autonomy, potentially across not just the whole manufacturing process but the whole product life cycle. For example, an appliance might book itself in for maintenance, or decide, given current costs, that it is life-expired and order its own replacement while arranging its own disposal, optimised for current scrap prices or minimised environmental impact.

What’s the problem?

All this sounds very disruptive (certainly true) and very expensive (also true, although probably not by as much as might be thought). Does the world need Industry 4.0?

Undoubtedly. As has been made clear in recent months, the world is an uncertain place and is growing more so. Human beings are not very good at making decisions in uncertain, probabilistic environments. It used to be that computers were even worse — they could only do what they were programmed to do, and even then, only with a complete set of data. But that is no longer the case. They can spot hidden trends, assess probabilities without bias and find near-optimal solutions even in the absence of the full picture. They are not bound by sequential, decision-tree thinking and problem solving. Crucially, they can reconcile competing goals in a way that people find hard or impossible — as a simple example, in transport planning, the reconciliation of cost, time and environmental impact.
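One way to picture the transport-planning example is a Pareto filter: rather than collapsing cost, time and environmental impact into a single number, the system keeps every option that no other option beats on all three counts. The sketch below is illustrative only; the `Route` type, the route names and all figures are invented for the example and do not come from any real planning system.

```python
from typing import List, NamedTuple


class Route(NamedTuple):
    """A hypothetical transport option; all figures are made up."""
    name: str
    cost: float    # e.g. euros per shipment
    hours: float   # door-to-door transit time
    co2_kg: float  # estimated emissions


def dominates(a: Route, b: Route) -> bool:
    """True if a is no worse than b on every goal and strictly better on one."""
    no_worse = a.cost <= b.cost and a.hours <= b.hours and a.co2_kg <= b.co2_kg
    better = a.cost < b.cost or a.hours < b.hours or a.co2_kg < b.co2_kg
    return no_worse and better


def pareto_front(routes: List[Route]) -> List[Route]:
    """Keep only the routes that no other route dominates."""
    return [r for r in routes if not any(dominates(o, r) for o in routes)]


routes = [
    Route("road", 900, 30, 520),
    Route("rail", 700, 48, 180),
    Route("air", 2400, 6, 1900),
    Route("road_detour", 950, 34, 560),  # worse than "road" on all three goals
]

front = pareto_front(routes)
front_names = [r.name for r in front]
print(front_names)
```

Here the detour is discarded because the direct road option beats it on every goal, while road, rail and air all survive: each is best on at least one criterion. Choosing among the survivors is where the business's priorities, or a learned preference model, would come in.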

Manufacturing and distribution businesses have to become ever more agile, often with seriously constrained resources. Consumer requirements change rapidly and unpredictably, but, as we have seen recently, so do supply markets and internal resources such as labour. And externalities such as environmental and social impacts now have to be internalised, whether by law or by customer demand. A business world that was already often too complex for human analysis is getting more so, and yet if we are to continue to supply people with even life’s essentials, let alone its luxuries, we need to have efficient and effective ways of working in this environment.

The technology road map

Delivering Industry 4.0 requires significant advances across a broad front. Many of the building blocks are already in place but are interdependent, and advances in one area depend critically on developments in others.

- Digitisation: the conversion or representation of material objects and actions into digital forms capable of processing by IT systems.

- Autonomisation: the process of providing equipment with the intelligence and learning attributes it requires to be able to make and act on its own decisions where appropriate with minimal human intervention.

- Transparency: all elements, whether human or cyber, have real-time visibility of the same version of the truth and what other elements are doing or proposing to do as a result; the paradigm is blockchain.

- Mobility: this relates to IT and communication devices but also to physical operations; just as the second (electrical) revolution meant that machinery no longer had to be within a line shaft’s length of the steam engine, cyber-enabled additive manufacturing (e.g., 3D printing) could move production close to points of need while reducing the requirement for large-scale investment in fixed assets.

- Modularisation: the increased use of standardised components and subsystems in different (and reconfigurable) combinations to achieve different objectives.

Two further areas for development are as much social as technological: Network Collaboration, and Socialisation. These require humans and machines to collaborate in the same specific networks and on the same tasks. Moreover, that collaboration must be “socialised” through two-way conversation: not just the human programming the machine or merely following the computer’s instructions, but true interaction.

Ultimately, this would mean that, in a warehouse or distribution centre for example, the lorries, handling equipment, containers, shelves and bins — in addition to the human workforce (and, sometimes, the products being stored and handled) — have their own degrees of autonomy but act collaboratively within a common network and with common visibility to which they are all contributing. This may be represented as a digital twin hosted in the cloud.

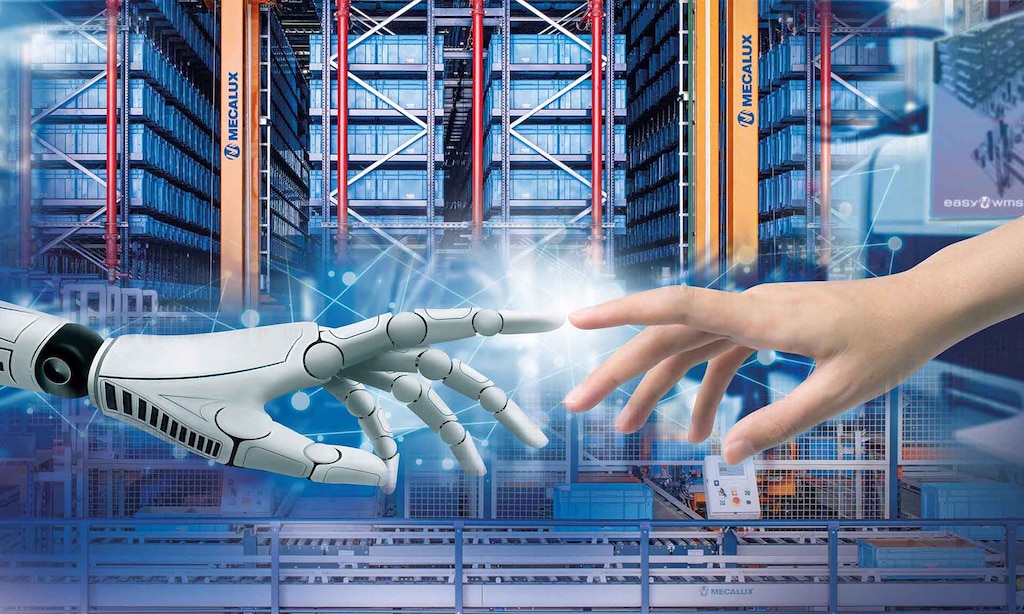

This approach applies in equal measure to physical operations and to business processes. In the physical realm, we are talking about collaborative robots (cobots): an autonomous workforce that is perceptive and informed about human intentions and desires, and that can not only respond to the current situation but also predict and anticipate. Cobots will have “the human touch”, both literally, through advances in vision systems and haptics (technologies that enable robots to touch and feel), and metaphorically, in the ways they interact with their human co-workers.

Some of the enabling technologies are a little scary and may meet resistance. For example, functional near-infrared spectroscopy (fNIRS) would allow the robot to directly sense and act on its human co-worker’s brain activity.

On a more positive note, digitisation will mean that both cobots and human workers can be fully trained in their tasks and interactions offline, without risk to health or production. Advances in sensing technologies, AI and deep learning will mean that cobots learn both by watching and by doing — much like an apprentice does — and, being digital, that learning can be transferred. Furthermore, whereas for humans there tends to be an upper limit beyond which further experience does not significantly improve performance, the cobot can continue to improve without becoming saturated with performance data.

An analogy for how a cobot would work with a human might be that of the operating theatre nurse and the surgeon. A good operating theatre nurse knows not only the requirements of the procedure, but the preferences of the surgeon, how he or she will respond to the unexpected and what the new requirements are likely to be, so that the right instrument is always at hand. Of course, the nurse performs many other tasks as well, including what one might call condition monitoring and alerting — again the sort of area in which a cobot might be supporting a human operator. It is no coincidence that surgery is, literally, at the cutting edge of robotic science and technology.

Rage against the machine

Technologically, all this is doable and indeed is being done. Whether it can be implemented in a manner that is socially and environmentally acceptable, or whether we are doomed to repeat the mistakes of previous industrial revolutions, is a different question.

Many people are understandably scared of the social implications of Industry 4.0 if unconstrained and are stressing the need to plan, for the first time, ways in which the latest industrial revolution can benefit (or at least not disadvantage) all the people now, rather than most of the people in a generation’s time. Indeed, several authors — notably, Saeid Nahavandi of Deakin University, Australia — are already advocating Industry 5.0 to actively ensure a human-centric solution whereby robots (broadly defined) “are intertwined with the human brain and work as collaborator instead of competitor.” The aim, in other words, is to ensure that human-friendly network collaboration and socialisation, as outlined above, actually come to pass.

Environmentally, this new industrial revolution bears the potential to yield considerable gains in our use of resources, eliminating many sources of waste in everything from raw materials to transport. There is, however, a fly in the ointment in the use of information technology itself.

IT is a huge consumer of power and of clean water. Ireland hosts over 70 large data centres, each using about 500,000 litres of water a day, which created water shortages last summer. The EU Green Deal calls for data centres to be carbon neutral by 2030, but that may be optimistic. In the UK (admittedly, no longer in the EU), servers already consume more than 12% of electricity generated. Iceland’s green hydro and geothermal capacity is being stretched to the limit by bitcoin mining. The latter is an extreme case, but blockchain in general is not energy-light.

By comparison, the brightest human brain, performing tasks that are beyond current computing abilities, is rated at around 20 W — in light-bulb terms, we are all pretty dim. We will need to consider whether every problem that can be solved by computer should be, and in particular, system designers need to favour edge computing over the mass transfer of data to and from remote servers and data centres. So, while individual autonomous devices need to see what decisions other system elements are making, they do not need to see the raw data behind those decisions, and they should by and large be making their own choices locally.
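The principle of sharing decisions rather than raw data can be sketched in a few lines. In this hypothetical example, an edge device keeps its sensor readings to itself and publishes only the action it has chosen; the class names, the temperature limit and the five-reading window are all assumptions made for illustration, not part of any real product.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List


@dataclass(frozen=True)
class Decision:
    """The only thing other system elements get to see."""
    device_id: str
    action: str


class EdgeDevice:
    """A hypothetical machine that decides locally from its own readings."""

    def __init__(self, device_id: str, limit_c: float) -> None:
        self.device_id = device_id
        self.limit_c = limit_c
        self._readings: List[float] = []  # raw data never leaves the device

    def record(self, temp_c: float) -> None:
        self._readings.append(temp_c)

    def decide(self) -> Decision:
        # Average the five most recent readings; with no data, carry on.
        recent = self._readings[-5:]
        if recent and mean(recent) > self.limit_c:
            return Decision(self.device_id, "slow_down")
        return Decision(self.device_id, "continue")


conveyor = EdgeDevice("conveyor-7", limit_c=80.0)
for reading in [70.0, 72.0, 90.0, 95.0, 96.0]:
    conveyor.record(reading)

shared = conveyor.decide()  # a few bytes, not the full sensor history
print(shared)
```

Only the small `Decision` object would cross the network, so neighbouring devices can see what the conveyor intends to do without a data centre ever storing or processing its temperature log.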

The social implications of Industry 4.0/5.0 are harder to call. Some technologies may not be psychologically acceptable — many (though certainly not all) warehouse workers actively dislike the feeling that they are being “ordered around” by a machine. How acceptable, then, would a direct fNIRS brain-to-computer feed be, in a world where too many people believe that Covid jabs are microchipped?

In a different vein, it remains to be seen how acceptable autonomous self-driving goods vehicles will be on public roads, even if they can be objectively shown to be safer. And if they are still going to require, not a driver, but an attendant, then what economic or labour problem has been solved?

But inevitably, the effects on jobs are top-of-mind for many people. The past is not an entirely reliable guide to the future, as your stockbroker will tell you. Nonetheless, every previous industrial revolution has vastly increased the demand for workers and, overall, for more skilled and better paid workers (even the appalling conditions of 19th century factories were made possible because conditions were still seen to be better than the previous agricultural alternative).

Additionally, across the developed world and elsewhere, there is actually a labour shortage. Birth rates in many countries are consistently below the natural replacement rates, while the older generation is living much longer into retirement. Moreover, many more young people are postponing their entry into the workforce as they pursue further educational opportunities. Even with a world economy yet to fully recover from Covid, there are shortages (in the US and Europe alone) at every level, from warehouse operators to lorry drivers to engineers and technicians. Any technological advance that can make more productive and rewarding use of a constrained labour force is to be welcomed.

There will be new jobs in abundance, but they will not, of course, be like-for-like replacements for the old. Serious commitment to retraining, by government and industry, is required.

Location is another factor, and in previous revolutions, old centres of activity and their people have often been left high and dry as industry relocated. That need not be the case this time: mobile technologies, 3D printing and other advances should free business from commitment to fixed and immovable buildings, production lines, material and energy sources, making it possible to bring manufacturing and other jobs to the people rather than forcing the people to move. That may also reduce economic overdependence on a few major conurbations, spreading prosperity more evenly and easing some of the logistical and environmental problems of very large cities.

Likewise, not all jobs should be automated just because they can be. Take two pick-face examples from e-commerce grocery. It is perfectly possible to build and train a robot, with vision and haptic systems, to pick and handle a single ripe mango. We can retrain it to do the same for guava. But that is a massive investment to perform a task that a teenager can learn in under a minute. Why would you do so?

Then again, there is the problem of substitutions. Many grocers will pick an alternative choice if the customer’s original requirement is not available. But the results are often hilariously inappropriate. In theory, we could use machine learning to make ever better choices. However, not only may there not be any underlying logic to the customer’s choice of replacement (e.g., the item is not part of the list of recipe ingredients represented by the rest of the shop), but there is very rarely any feedback to the company about unsuitable substitutions. The machine has no data to learn from; the order picker’s guess is as good as it gets (and probably better than many crude algorithms).

Transformation on the horizon

Industry 4.0 is upon us, and it will happen more quickly than we expect, and more quickly than previous transformations did. If the people and their governments, industries and technologists make the decision that they want this to be the first human-centric industrial revolution, we have immense opportunities for benefits right across society and the globe. If we choose not to give the technology a steer in the right direction, we risk immense damage to our people and our planet.

Dr. Yasel Costa is a Professor of SCM and the Director of both the PhD Program and the PhD Summer Academy at the MIT-Zaragoza International Logistics Program.